I was given a work assignment to do a deep dive into the documents that surfaced from the antitrust lawsuit against Google, whose evidentiary phase ended in November 2023.

What I thought would be an incredibly dry and technical bit of research turned up some interesting information. Some of it affirmed long-held SEO beliefs, some of it earned a nod of understanding, and some of it surprised me a bit.

The rest, well... was pretty dry and technical. I’ll give myself half a point for that one.

I’m not going to dig deeply into the mechanisms at play or the technical information itself (AJ Kohn already did a great job at that). Instead, I’ll offer some takeaways and conclusions I’ve come to after mulling over this information alongside a decade of SEO experience.

The goal is to read between the lines a bit and favor the practical over the technical.

Let’s dig in.

Table of Contents

- Backstory: the case itself and how we got here

- The mobile experience isn’t given the prioritization it should

- Quality Rater Guidelines should be mandatory reading for SEOs

- Traditional marketing tactics are still important in SEO

- Everything starts and ends with the user

- Wrapping up

Backstory: the case itself and how we got here

The gist of the case: the government filed a lawsuit intending to rein in some of the biggest companies in tech. As these companies grow larger and larger, adding more product offerings and gaining more market share, there’s increasing concern that they become monopolies. All this extra power comes with the potential for abuse, which is one of the concerns the case aimed to address.

As part of this trial, Pandu Nayak, the Vice President of Search at Google, was a witness. He’d been with Google for quite some time and, as his title suggests, is very familiar with the way Google’s search results are put together and displayed.

His testimony (which you can view here in full if you’d like) revealed a handful of search mechanisms that have long been speculated about but rarely confirmed.

That’s how we got here. Now for the takeaways.

The mobile experience isn’t given the prioritization it should

Digital marketers, at least in my experience, tend to focus new internet experiences (outside of apps, of course) on desktop users.

Designs? We’re typically presented the desktop version for initial judging.

Testing? Sure, mobile gets tested at some point, but it’s often a bit of an afterthought.

Site speed? OK, I admit, this is an area where we often pay a little more attention to mobile, but most agencies still prioritize desktop.

Why?

We’re working predominantly on desktops, and many of us grew up accessing the internet from a desktop. It’s a bias many of us have built into ourselves over time. Desktop versions are also easier to present, comment on, and analyze without the need for emulators or other hardware.

We need to break this bias.

The transcript itself mentions that mobile and desktop results can differ because of the intent implied by searching on a mobile device, but that’s not the main point I want to call attention to here.

Most SEOs are familiar with the Quality Rater Guidelines. Human raters use them to score websites, and those scores are then used to fine-tune some of the machine learning algorithms. More on this later.

Here’s an interesting tidbit from the testimony of one of Google’s expert witnesses, Prof. Edward Fox:

“Google made a decision some years ago to do all the rater experiments with mobile.”

SEOs already knew about Google’s push toward mobile-first indexing. That’s old news.

What’s new here, or new to me anyway, is that the quality raters judging each page they visit, and assigning scores that go on to have a tremendous impact on the algorithms, aren’t even seeing the desktop versions of the sites they review.

From a design and attention perspective, we typically add all the extra bells and whistles to our desktop versions of sites with mobile considered a bit more of an afterthought.

Have a page that’s super useful and demonstrates great experience, expertise, authoritativeness, and trustworthiness through neat desktop features that aren’t present on mobile? Those features won’t carry as much weight, because the raters won’t see them.

Note: If those features are in the code, then yes, they’ll still be crawled, but that doesn’t help you much if a quality rater reviewing your page can’t see them.

My takeaway: we should be giving mobile much more emphasis from the outset than many companies and consultants currently do.

Quality Rater Guidelines should be mandatory reading for SEOs

Alright, I’ll admit this isn’t as hot a take as I’d like it to be, but raise your hand if you’ve read the Quality Rater Guidelines from beginning to end. I’ll wait.

I’m (metaphorically, anyway) not seeing many hands raised. I’ll also confess that mine isn’t up either – for now. Digging into that (admittedly grueling and fairly long) document is one of the next things on my ever-growing to-do list.

The thing is, this shouldn’t be shocking, right?

Google provides a set of guidelines for Quality Raters to rate websites with. We know this data is important, and the antitrust case testimony and documents have revealed that nearly everything Google does from an algorithm and machine learning standpoint incorporates it.

Here’s a quote from Pandu explaining why it would be a bad idea to take the human element out of the equation:

“I think it’s risky for Google — or for anyone else, for that matter, to turn over everything to a system like these deep learning systems as an end-to-end top-level function. I think it makes it very hard to control.”

Pandu Nayak

So the human part of this equation, which again is factored into all of the machine learning elements, doesn’t seem to be going anywhere for the moment.

Note: I’m not sure how this will play out with things like SGE (Search Generative Experience), which notably lacks some of that human element and, like nearly all generative AI at the moment, tends to be a black box when it comes to understanding where its links came from.

Luckily for us, the guidelines that humans use to rate these sites, and subsequently tune the models, are not only visible to us, but highly accessible too.

My takeaway: All SEOs should make a point of reading the Quality Rater Guidelines, and the findings in this case only make that more important. We should also pay closer attention to changes in them. Seriously, grab yourself a nice warm drink, get some comfy clothes, and really sit down and dig through them. That’s my plan; feel free to borrow it.

Traditional marketing tactics are still important in SEO

SEO is a category under digital marketing. Digital marketing is a category under marketing. This isn’t exactly revelatory.

With that said, we as SEOs need to keep in mind that traditional marketing tactics complement SEO. You know the line: write for humans, not for robots.

So why is this a takeaway?

Well, part of Pandu’s testimony went over Navboost and how it functions. Navboost is a system that uses the last 13 months of click data (previously 18 months), spanning billions of clicks, to help determine what users are actually clicking on.

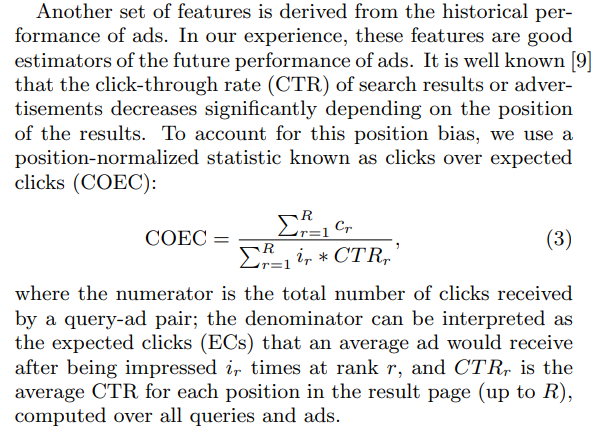

In this article by AJ Kohn, Navboost is described as using a model based on COEC (clicks over expected clicks).

We’re going to get technical for a minute, bear with me.

COEC is described in more detail in this Yahoo! paper.
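A commonly cited form of COEC is below. Treat it as a sketch of the general idea rather than a verbatim copy of the paper’s notation; how Google actually computes anything like it inside Navboost isn’t public.

$$
\mathrm{COEC} = \frac{\sum_{p} c_p}{\sum_{p} i_p \, \mathrm{CTR}_p}
$$

Here $c_p$ is the number of clicks a result received at position $p$, $i_p$ is the number of impressions it received at that position, and $\mathrm{CTR}_p$ is the average clickthrough rate for any result shown at position $p$. The denominator is the number of clicks an average result would be expected to get in those same positions.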

To vastly oversimplify: think of this like a weighted clickthrough rate.

Basically, COEC takes the expected number of clicks for a result, adjusted for factors like its position on the page (lower position = fewer clicks), and compares it to the actual number of clicks received.

If the actual clickthrough rate is higher than we would have expected, then it stands to reason that users preferred that result, since it overperformed against expectations. This can lead to ranking increases.
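To make this concrete, here’s a minimal Python sketch of clicks over expected clicks under loudly stated assumptions: the baseline CTR per position and all click counts are made-up illustrative numbers, and `coec` is a hypothetical helper, not Google’s Navboost code or the Yahoo! paper’s implementation. It compares the clicks a result actually earned with the clicks an average result would have earned in the same positions.

```python
# Assumed baseline: average CTR for ANY result at each position.
# These numbers are illustrative only, not real Google or Yahoo! data.
AVG_CTR_BY_POSITION = {1: 0.30, 2: 0.15, 3: 0.10, 4: 0.07, 5: 0.05}


def coec(stats: dict[int, tuple[int, int]], baseline: dict[int, float]) -> float:
    """Clicks over expected clicks for one result (hypothetical helper).

    stats maps position -> (impressions, clicks) observed at that position.
    """
    actual_clicks = sum(clicks for _, clicks in stats.values())
    # Expected clicks = impressions at each position x that position's average CTR.
    expected_clicks = sum(
        impressions * baseline[position]
        for position, (impressions, _) in stats.items()
    )
    if expected_clicks == 0:
        raise ValueError("no expected clicks; cannot compute COEC")
    return actual_clicks / expected_clicks


# Two hypothetical results with identical positions and impressions.
well_known_brand = {3: (1000, 150), 4: (500, 50)}
unfamiliar_site = {3: (1000, 80), 4: (500, 25)}

print(round(coec(well_known_brand, AVG_CTR_BY_POSITION), 2))  # prints 1.48
print(round(coec(unfamiliar_site, AVG_CTR_BY_POSITION), 2))   # prints 0.78
```

Both results are expected to earn about 135 clicks in those positions. A score above 1 means a result beat that expectation and a score below 1 means it fell short, which is exactly the kind of gap brand recognition and copywriting can create.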

Here’s where things get interesting.

Ask yourself a quick question: what are some reasons that a page would perform with a better clickthrough rate than would be expected?

There are two in particular I’d like to focus on:

Brand recognition: People are more likely to click on a brand they already have a positive association with.

Copy: A well-written and enticing title or meta description may have swayed a user to click that result instead of a competitor.

To bring this home and stop circling around the point, both brand recognition and copywriting are elements of traditional marketing.

Brand recognition

Brand recognition isn’t typically something accomplished via SEO alone. If we think about Navboost as a big signal that relies on past data, then we run into a bit of a chicken-and-egg situation.

Users won’t be as enticed to click on a result that they’re unfamiliar with, which means you get no click data, which means you have no data to algorithmically feed your ranking, which means you don’t show up.

How do we build brand recognition? Traditional marketing tactics. Social media, newsletters, user testing, ads, the list goes on and on.

Is it possible to build brand recognition through SEO alone? Maybe, with a lot of time and persistence, but ultimately brands that establish themselves and develop brand recognition are going to have a massive, massive advantage.

This is partly why we see big brands like Forbes showing up for searches we wouldn’t necessarily expect them to. They’ve developed a brand, and users are more likely to click the name they recognize.

Copywriting

Another traditional marketing tactic, copywriting is highlighted in this particular interaction thanks to its ability to entice a click.

Now, it goes without saying that Google’s got numerous other algorithms and ranking factors at play to dissuade blatant clickbait headlines; if they didn’t, our SERPs would look like a Buzzfeed index, and they (usually) don’t.

But that doesn’t mean that there isn’t power in evocative copywriting.

Titles and meta descriptions are what users quickly skim through when they’re presented with a SERP. It stands to reason that this is one of your biggest chances to hook their attention and draw them into your site.

How? With effective copy.

Meta descriptions in particular are usually dismissed as a ranking factor by SEOs who have been doing the job for a long time, and that’s fairly reasonable: sometimes Google rewrites them completely in favor of something else it wants to show.

But in the instances that they don’t, that’s a chance for you to entice that click.

Remember: more clicks than expected = stronger click data = higher rankings.

So what’s the point?

The point that I’m making here is that while SEO is a subset of marketing, you can’t do it well without traditional marketing tactics too.

Effective SEOs should spend more time digging into the fundamentals of copywriting, or work closely with the creative team doing that work if they have one.

Yes, we want to get our keywords into the title for our glorious rankings, but if nobody clicks on our cleverly keyword-stuffed titles, we won’t hold onto those rankings for long.

Likewise, we should be collaborating with other branches of marketing too in an attempt to help build up brand recognition. The more effective your ads team is, or your social media team, or whatever other team helps to get the brand name out there in front of customers, the more successful your SEO will be.

My takeaway: Lean into traditional marketing. Dust off your college textbooks on copywriting. At the very least try to stay informed about what other marketing is going on to get your brand’s name out there. These pursuits and SEO are symbiotic; when one succeeds, they all succeed.

Everything starts and ends with the user

This isn’t (or at least I hope it isn’t) a surprise to SEOs, but it’s a fact that can get lost in the weeds when we’re digging into technical elements, or when we’ve been doing this so long that we miss the forest for the trees.

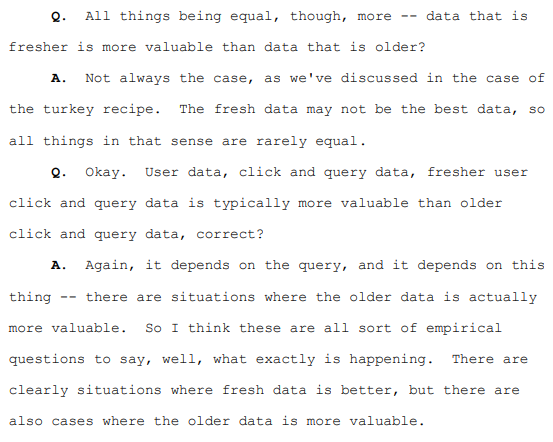

Take this excerpt discussing the importance of freshness for example:

The turkey recipe refers to an example about instructions for cooking a turkey. Those instructions haven’t changed much over the years, and there’s a good chance someone nailed them a long time ago.

As a user, I don’t want the latest home cook’s insight on how to cook a turkey just because it was posted last week. I want Julia Child’s tried-and-true method that turns out deliciously buttery and great every time.

We get so deep in the weeds on our products and websites that we often neglect to consider the average user, which is a terrible mistake.

All of Google’s algorithms come into play at some point to try to dig into the nitty-gritty of what a user is actually looking for. A few examples from these court documents:

Is your user searching for a local place near them? RankBrain will interpret that and help to ensure local results are prioritized.

Is your user searching for a more common term than the technical one you’ve presented? DeepRank will play a role in understanding the more common sense language as opposed to taking things so literally.

Does your user want the most updated information on something unfolding right now? Instant Glue will help to assemble that for them.

Users shape the internet

Considering the amount of user click data that goes into training these algorithms, users also help shape the content that ranks.

Here’s an example:

Let’s say that user data shows a preference for comprehensive guides in response to a particular query. Users spend longer sessions on these comprehensive pages, and the pages earn more clicks than expected for their positions.

Over time, rankings start to mirror that behavior, placing comprehensive guides higher and higher.

SEOs pick up on this and start recommending comprehensive guides, steering content in that direction, which in turn leads to a proliferation of comprehensive guides everywhere.

Every user click and interaction creates a feedback loop that lets Google adapt, learn, and ultimately shape what we see on the internet.

My takeaway: If you’re ever stuck and need to figure out how to handle something, think about the average user. What would be helpful to them? What are they likely to click? Use this concept to ground your ideas.

Wrapping up

When I’m asked what my takeaways were from the documents revealed through the antitrust case, my answer isn’t necessarily any sort of groundbreaking super-secret algorithm spill.

Sure, we now know the internal names of some algorithms that have already been floating around. We also know how important Quality Raters are, and we know that an abundance of user data allows Google to try to predict the future with learnings from the past.

We know that Google conducts tests to measure up against Bing – but that’s just standard competition stuff.

We know that Google crawls vetted news sites at a high (frankly crazy) rate so it can give users the most up-to-date, relevant information.

We know that live experiments are carried out at a scale that is difficult to imagine for anyone who’s ever played a role in product experimenting.

But to me, most of that seems like filler, or little trivia nuggets that are good to tuck into the back of your mind.

My main takeaways, and the ones that will stick with me going forward, are the practical ones. The human element. Humans are the ones shaping the internet, and as an SEO it’s easy to get too far into the weeds with technical considerations, keyword density, all of it.

Now, if you’ll excuse me, I’ve got a dramatically large PDF of the Quality Rater Guidelines to get to reading.
